Hadoop Development

Apache Hadoop is useful across a range of industries and for a multitude of use cases. Wherever you need to store, process, and analyze large, even massive, amounts of data, it is up to the task. Use cases include analyzing social network relationships, predictive modeling of customer behavior in stores or online (e-commerce recommendations), automation of digital marketing, detecting and preventing fraud, intelligence gathering and interpretation, location-based marketing for mobile devices, call and utility detail analysis, and much more. Pwtech delivers enterprise-level Hadoop development and data services that help you gain better insights from big data, along with much-needed scalability, flexibility, and cost effectiveness. We provide Hadoop solutions for large-scale data storage and seamless processing according to each client's requirements. Our Hadoop system architects are experts at building high-bandwidth clustered storage networks.

- Defining the applicable business use cases

- Technology assessment

- Determination of the right platform to integrate

- Evaluation of the business architecture

- Prototype development

- Benchmarking for performance

- Development on databases, data warehouses, cloud apps and hardware

- Deployment, administration, and performance monitoring through automation of development tools

- Building distributed systems to ensure scaling

- Re-engineering of applications for MapReduce

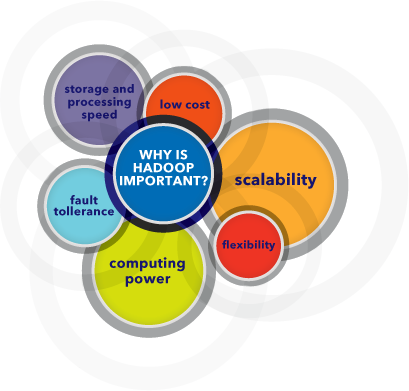

Why and where it is required

Hadoop is changing how Big Data is handled, especially unstructured data. The Apache Hadoop software library is a framework that plays a vital role here: it allows large data sets to be processed in a distributed fashion across clusters of computers using simple programming models. It is designed to scale up from single servers to a large number of machines, each offering local computation and storage. Rather than relying on hardware to provide high availability, the library itself is built to detect and handle failures at the application layer, delivering a highly available service on top of a cluster of computers, each of which may be prone to failure.
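To give a feel for what a "simple programming model" can look like, here is a minimal sketch of the map, shuffle, and reduce steps for a word count, written in plain Python. It runs in a single process and does not use Hadoop or any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by their key."""
    groups: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(word: str, counts: List[int]) -> Tuple[str, int]:
    """Reduce step: collapse the values for one key into a single total."""
    return word, sum(counts)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence on an in-memory data set."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(pairs)
    return dict(reduce_phase(word, counts) for word, counts in grouped.items())


if __name__ == "__main__":
    print(word_count(["big data", "Big clusters"]))
```

In a real cluster, the map and reduce steps would run in parallel on many machines, each working on the data stored locally; this sketch only shows the shape of the logic a developer writes.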

About Hadoop

Hadoop is an open-source software framework from Apache that enables companies and organizations to perform distributed processing of large data sets across clusters of commodity servers. It is built to process huge amounts of data, whether structured, complex, or unstructured, with a very high degree of fault tolerance. It can scale up from a single server to thousands of machines, each offering local storage and computation. Instead of relying on high-end hardware to deliver high availability, the software itself detects and handles failures at the application layer, making clusters of servers much more resilient even though individual machines are prone to failure.
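The idea of handling failures in software rather than in hardware can be illustrated with a small sketch. The code below is not Hadoop's scheduler and uses no Hadoop API; it is a simplified, hypothetical example in plain Python in which a task that fails on one worker is simply retried on the next. The names `WorkerFailure`, `run_with_failover`, and `count_records`, as well as the node names, are assumptions made for illustration.

```python
from typing import Callable, List, Sequence


class WorkerFailure(Exception):
    """Raised by a (hypothetical) worker that cannot complete its task."""


def run_with_failover(task: Callable[[str], int], workers: Sequence[str]) -> int:
    """Try the task on each worker in turn and return the first successful result."""
    failed: List[str] = []
    for worker in workers:
        try:
            return task(worker)
        except WorkerFailure:
            # The failure is detected in software; move on to the next worker.
            failed.append(worker)
    raise RuntimeError(f"task failed on all workers: {failed}")


def count_records(worker: str) -> int:
    """Hypothetical task: pretend that node-1 is broken and every other node works."""
    if worker == "node-1":
        raise WorkerFailure(worker)
    return 42


if __name__ == "__main__":
    # node-1 fails, so the result comes from node-2.
    print(run_with_failover(count_records, ["node-1", "node-2"]))
```

Because the retry logic lives in the application layer, the cluster can be built from inexpensive commodity servers: an individual machine failing costs a retry, not the whole job.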

Reasons to hire Hadoop experts from us

Our aim is to take your business to the heights of success, and we go the extra mile to achieve it.

Listed below are some of the reasons to hire developers from us:

- We stay with our customers throughout the entire development cycle to make sure they are satisfied.

- We take pride in providing services that fit within our clients' budgets.

- Our developers are not only well versed in the latest technologies but also possess excellent English communication skills.

- Our experience has helped us win the hearts of clients all over the world.

Our Awesome Work

We've enjoyed working with clients of all sizes and profiles, located all over the world. Here are some of our latest projects. Every website we make is hand-crafted from scratch, taking into consideration every client's unique requirements, existing brand, and personal taste.

Testimonials

Hear success stories straight from our clients.

Kartik Manager – qatar-telp

Vikas CEO – Singapore-zonj